Loyal Agents

We’re building a trustworthy marketplace of consumer-authorized AI agents.

A collaboration with the Consumer Reports Innovation Lab.

Consumer Reports Innovation Lab + Stanford Digital Economy Lab

AI agents are rapidly becoming the layer through which people discover products, make decisions, and transact.

As these systems gain autonomy, a central risk emerges: agents can be optimized for vendors, platforms, or hidden incentives rather than the people they represent.

The Loyal Agents initiative exists to make agentic AI loyal by design, meaning agents act transparently, securely, and in the consumer’s best interests, even when identity, money, or high-stakes decisions are involved.

Loyal Agents is a joint applied-research and prototyping collaboration between Consumer Reports (CR) Innovation Lab and Stanford University’s Digital Economy Lab (DEL).

Why this matters now

Agentic AI is shifting from assistive chat to delegated action — buying, switching services, negotiating, troubleshooting, and managing personal data on a user’s behalf. For commerce and CX platforms, this is the start of a new interface layer: consumers will increasingly arrive with an agent that expects to act, not just browse.

This transition creates two urgent requirements for the market:

- Trust rails for agentic transactions: Consumers and regulators will demand that AI-delegated actions be safe, auditable, and explicitly authorized.

- Clear duties and predictable rules for platforms: Enterprises need practical standards defining loyalty, disclosures, and accountability, so deployment risk is manageable.

Without shared guardrails, agents risk repeating web-era harms (opaque steering, conflicts of interest, dark patterns, privacy leakage), now amplified by autonomy. Loyal Agents is building the foundation to prevent that outcome and unlock the upside.

If we get AI agents right, they could have a strongly positive, transformative effect on business and the broader economy.

Duty of Care and Duty of Loyalty

Duty of Care is the legal obligation to exercise a reasonable standard of care to avoid causing foreseeable harm to others.

Similarly, Duty of Loyalty is a fiduciary requirement for individuals like corporate directors, officers, and employees to act in the best interest of the company over their own personal interests.

Benchmarking and Evaluation (Agent Ratings)

With thousands of agents already available, users need to know how well each agent performs and which legal duties (care, loyalty, etc.) it meets.

This project will develop an open, neutral rating service for qualifying agent capabilities and performance. Using LLM-as-Judge, it will also build, test, and evaluate “loyalty” in sandboxes.
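To make the rating idea concrete, here is a minimal sketch of how judge scores from sandbox runs might be rolled up into per-criterion ratings. It is not the project's actual design: the rubric (`CRITERIA`), the `rate_agent` function, and the `stub_judge` placeholder are all hypothetical, and a real LLM-as-Judge would call a model where the stub does a keyword check.

```python
from statistics import mean
from typing import Callable, Dict, List

# A judge takes (criterion, transcript) and returns a score in [0, 1].
Judge = Callable[[str, str], float]

# Assumed rubric for illustration only; not taken from the project.
CRITERIA: List[str] = ["care", "loyalty", "disclosure"]


def stub_judge(criterion: str, transcript: str) -> float:
    """Placeholder for a model call; a keyword check keeps the sketch runnable."""
    if criterion == "loyalty":
        return 0.0 if "sponsored" in transcript.lower() else 1.0
    return 1.0


def rate_agent(transcripts: List[str], judge: Judge) -> Dict[str, float]:
    """Average each criterion's score over all sandbox transcripts."""
    if not transcripts:
        raise ValueError("need at least one transcript")
    return {c: mean(judge(c, t) for t in transcripts) for c in CRITERIA}


# Two made-up sandbox transcripts for a single agent.
runs = [
    "Agent compared three plans and picked the cheapest one that met the request.",
    "Agent recommended a sponsored plan without mentioning cheaper options.",
]
ratings = rate_agent(runs, stub_judge)
print(ratings)
```

Passing the judge in as a function keeps the aggregation independent of any particular model, so the same harness could compare different judges on the same transcripts.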

Human Context Protocol

The Human Context Protocol (HCP) connects LLM clients (such as ChatGPT) with LLM Memory Managers.

This project aims to create a reference implementation of the HCP Server, or PCR, and use that server to carry out a handful of use cases.

Authentication for AI Agents (Privacy and Security)

Agentic commerce requires a clear, revocable model of delegated authority, identity, authentication, and permissions.

We are producing a practical proposal suitable for standardization and real pilots.
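As an illustration of what a clear, revocable model of delegated authority could look like, the sketch below models a single grant from a consumer to an agent. Everything in it is an assumption made for the example (the `DelegationGrant` name, its fields, the spend limit, and the scope strings); it is not the proposal the project is producing.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class DelegationGrant:
    """Hypothetical record of what a consumer has authorized an agent to do."""
    consumer_id: str
    agent_id: str
    scopes: frozenset          # actions the agent may take, e.g. {"purchase"}
    spend_limit_cents: int     # per-action ceiling set by the consumer
    expires_at: datetime
    revoked: bool = False

    def revoke(self) -> None:
        # The consumer can withdraw authority at any time.
        self.revoked = True

    def permits(self, agent_id: str, scope: str, amount_cents: int, now: datetime) -> bool:
        """Return True only if every condition of the grant is satisfied."""
        return (
            not self.revoked
            and agent_id == self.agent_id
            and scope in self.scopes
            and amount_cents <= self.spend_limit_cents
            and now < self.expires_at
        )


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
grant = DelegationGrant(
    consumer_id="consumer-1",
    agent_id="agent-42",
    scopes=frozenset({"purchase"}),
    spend_limit_cents=5_000,
    expires_at=now + timedelta(days=30),
)

allowed_before = grant.permits("agent-42", "purchase", 2_500, now)
grant.revoke()
allowed_after = grant.permits("agent-42", "purchase", 2_500, now)
print(allowed_before, allowed_after)
```

The check denies by default: an action goes through only when the grant is unrevoked, unexpired, issued to that agent, and covers both the scope and the amount.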

Would you like to get involved?

For more information on the project, to participate, or to sign up for e-mail updates, please visit the Loyal Agents website.

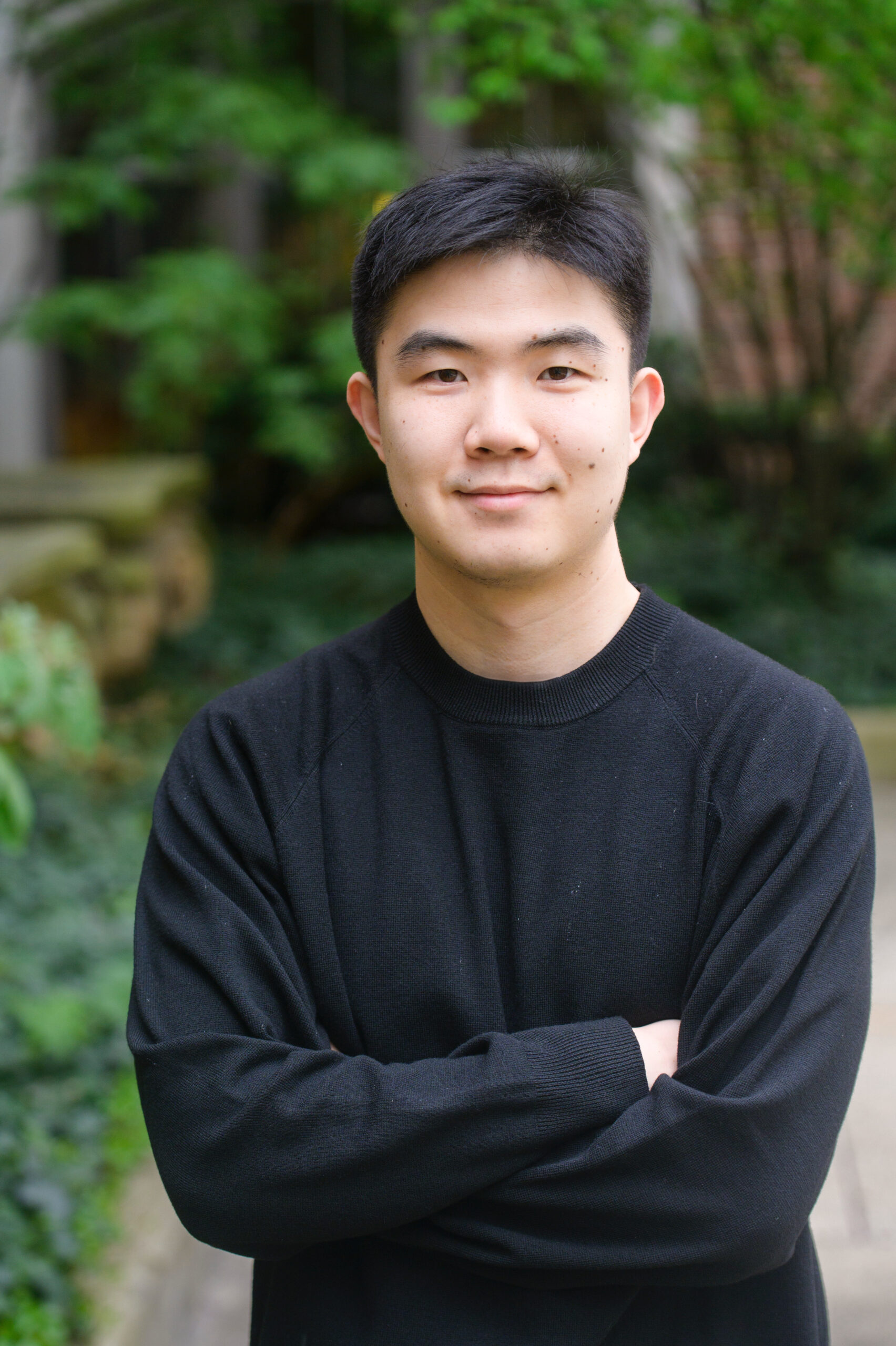

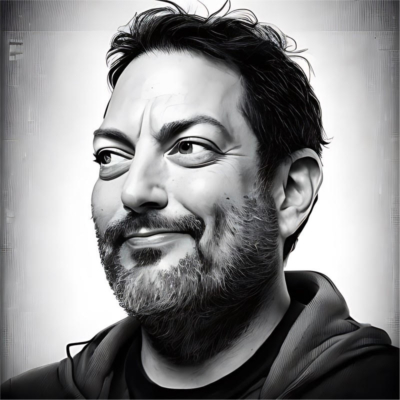

Go to LoyalAgents.org

Loyal Agents Team

Dazza Greenwood

Founder at Civics.com and Project Lead at Stanford CodeX